By now most marketers are familiar with the process of experimentation: identify a hypothesis, design a test that splits the population across one or more variants, and select a winning variation based on a success metric. This “winner” carries a heavy responsibility: we’re assuming that it will deliver the improvement in revenue and conversion that we measured during the experiment.

The experiments that you run have to result in better decisions and, ultimately, ROI. Further down we’ll look at a situation where an external validity threat, in the form of a separate campaign, would have invalidated the results of a traditional A/B test. In addition, I’ll show how we were able to adjust for and even exploit this external factor using a predictive optimization approach, which resulted in a Customer Lifetime Value (LTV) increase of almost 70%.

Where are these threats in marketing?

We sometimes think of our buyer’s context as something static and in our control, but the reality is that the context is constantly changing due to external forces. These changes can affect the validity of the conclusions we draw from our past and current experiments.

Here are a few examples of external circumstances that can impact our “static” buyer’s context:

- changes in your marketing campaign mix or channel distribution

- seasonality around the calendar (e.g. holidays) or industry events

- changes in product, pricing or bundling

- content & website or other experience updates

- competitor & partner announcements

- marketing trends

- changes in the business cycle or regulatory climate

Trying to generalize experiment results while the underlying context is changing can be like trying to hit a rapidly moving target. In order to accommodate this shifting context, you must take these factors into consideration in your tests and data analysis, then constantly test and re-test your assumptions.

This is tedious and a lot of work, however, which is why most of us don’t do it.

What if there was a way to automatically adjust and react to changing circumstances and buyer behavior?

You can’t control the future, but you can respond better to change

There’s an important difference between what scientists are doing with their experiments and what we’re trying to accomplish in marketing. There’s no way that a scientist is going to be able to reach every single potential patient in the same controlled way that they’re able to in the lab. So they’re bound by the need to generalize and extrapolate results and learnings as best they can to the general population.

In marketing, although the customer context may change, we have access to every single future customer in the same manner that we do within the experiment. If you’re running a website, email or ad A/B test, rolling it out to the rest of the population doesn’t present any significant challenges.

With a typical experiment, to fully exploit the learnings you hardcode the best-performing experience, which also locks in the contextual assumptions you made during the experiment. If, however, you can continuously adjust for changing circumstances and deliver the most effective experience using the most recent information available, then your marketing can be much more agile.

Theoretically, you could make these adjustments by continuously testing and retesting variations, using only the most recent data points to inform your decision. But again, that could end up being a lot of work, especially considering you might have to implement new winners in the future.
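To make the "most recent data points" idea concrete, here is a minimal sketch of re-picking a winner from a sliding window of recent outcomes. The function name `recent_winner`, the `(variation, revenue)` event format and the window size are assumptions made for illustration; this is not a description of any particular tool.

```python
from collections import defaultdict, deque


def recent_winner(events, window=1000):
    """Return the variation with the highest mean revenue in the newest `window` events.

    `events` is an iterable of (variation, revenue) tuples in chronological order.
    Both the event shape and the window size are illustrative assumptions.
    """
    recent = deque(events, maxlen=window)  # older events fall off the left side
    if not recent:
        raise ValueError("no events to evaluate")
    totals = defaultdict(float)
    counts = defaultdict(int)
    for variation, revenue in recent:
        totals[variation] += revenue
        counts[variation] += 1
    return max(counts, key=lambda v: totals[v] / counts[v])


# Hypothetical history: "A" performed well early on, then the context shifted.
history = [("A", 10.0)] * 50 + [("B", 2.0)] * 50 + [("A", 1.0)] * 20 + [("B", 5.0)] * 20
print(recent_winner(history, window=1000))  # uses the whole history
print(recent_winner(history, window=40))    # uses only the 40 newest events
```

With the whole history the older results dominate, while the short window reflects the shift. Someone still has to rerun this and ship the new winner each time, which is the manual work described above.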

Contextual Optimization In Action

Our team at FunnelEnvy helps customers optimize web experiences for revenue. We were recently working with the team at InVision to use a predictive model to automatically decide which variation to show based on recent data.

To accomplish this we used our Predictive Revenue Optimization platform, which is similar to the contextual bandit approach to optimization that has been effective at companies like Zillow, Microsoft and StitchFix. By integrating context about a site visitor along with outcomes (conversions), contextual bandits select experiences for individual visitors to maximize the probability of that outcome. Unlike traditional A/B testing or rules-based personalization, they’re far more scalable, make decisions on a 1:1 basis, respond to change automatically and can incorporate multiple conversion types (e.g. down-funnel or recurring revenue outcomes).
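For readers who haven’t seen a contextual bandit before, the sketch below shows the general idea in its simplest form: an epsilon-greedy policy that keeps a running average reward for every (context, experience) pair. It is a deliberately simplified illustration and not how our platform is implemented; the class name, the `epsilon` parameter and the use of a single hashable segment label as "context" are all assumptions.

```python
import random
from collections import defaultdict


class EpsilonGreedyContextualBandit:
    """Toy contextual bandit: per-context running means with epsilon-greedy exploration."""

    def __init__(self, arms, epsilon=0.1, seed=None):
        self.arms = list(arms)            # the experiences we can show
        self.epsilon = epsilon            # share of traffic used for exploration
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # (context, arm) -> number of observations
        self.means = defaultdict(float)   # (context, arm) -> mean observed reward

    def select(self, context):
        """Pick an experience for a visitor described by a hashable `context`."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)  # explore: random experience
        # exploit: best known experience for this context (ties go to the first arm)
        return max(self.arms, key=lambda arm: self.means[(context, arm)])

    def update(self, context, arm, reward):
        """Fold an observed outcome (e.g. revenue) into the running mean."""
        key = (context, arm)
        self.counts[key] += 1
        self.means[key] += (reward - self.means[key]) / self.counts[key]


# Hypothetical usage: the segment labels and rewards are made up.
bandit = EpsilonGreedyContextualBandit(["baseline", "plan_a"], epsilon=0.1, seed=42)
bandit.update("enterprise", "plan_a", 100.0)
bandit.update("enterprise", "baseline", 10.0)
choice = bandit.select("enterprise")
```

Because every new outcome updates the averages, the policy drifts toward whatever is working now for each kind of visitor. Production systems typically replace the lookup table with a predictive model over many context features.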

In this case we were running an experiment trying to improve their upgrade experience.

As with any freemium business model, the conversion rate from the free to paid plan is heavily scrutinized and subject to continuous testing and optimization. For InVision, the free plan has been immensely successful at familiarizing users with the product. InVision also offers a number of paid tiers with different features and price points.

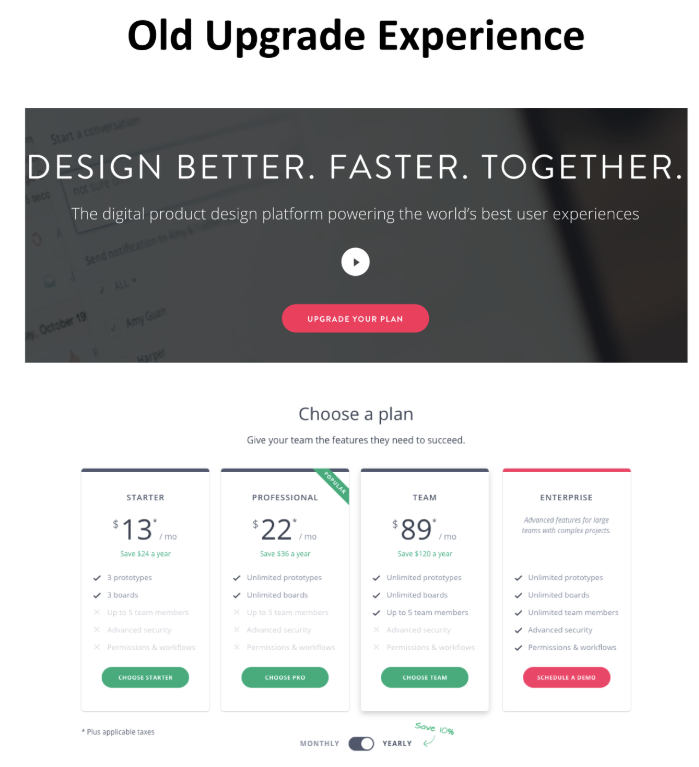

During our analysis we noticed a lot of traffic from free plan users was landing on the home page. When those visitors arrived, InVision showed them a Call to Action (CTA) to “Upgrade your Plan”. When the visitor clicked on this CTA, they were taken to a page listing the various premium plans, giving them the option to enter their payment information after they made a selection.

This is a relatively standard SaaS upgrade experience, and we wanted to test whether there was any room for improvement. We noticed that the home page click-through rate was relatively low, and we reasoned that simply having an “Upgrade Your Plan” CTA without a clearer articulation of the benefits that come with upgraded plans wasn’t compelling enough to get users to click.

So we came up with a hypothesis: If we present visitors with a streamlined upgrade process to the best plan for them we will see an increase in average Customer Lifetime Value from paid upgrades.

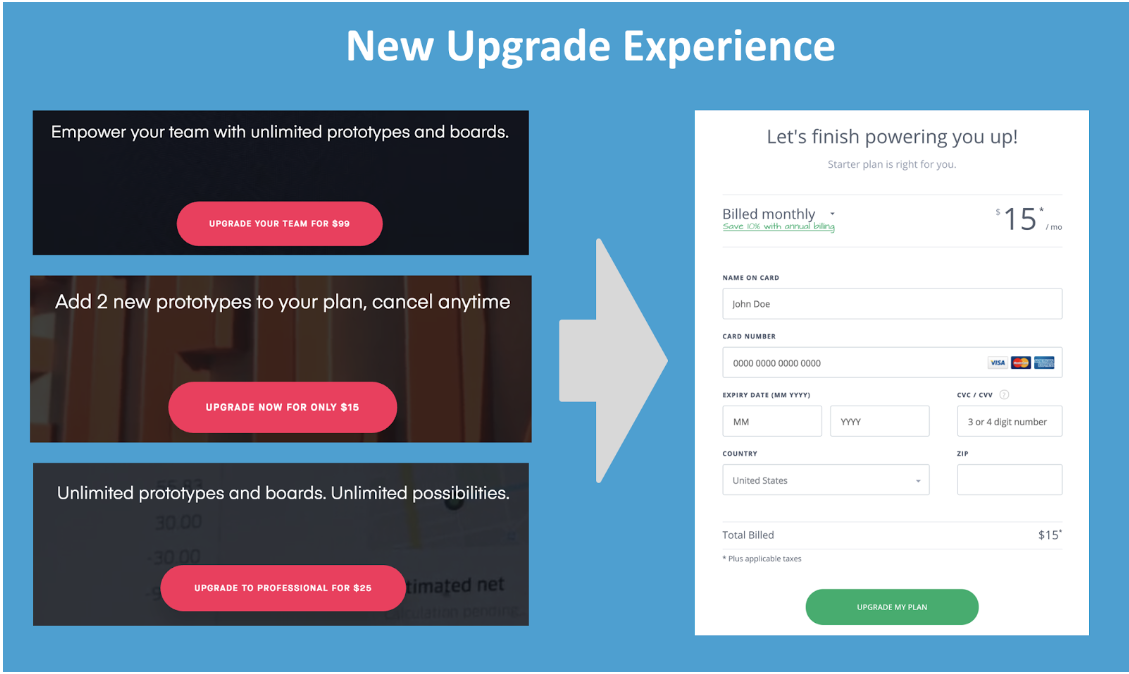

So we created three different variations of the upgrade experience, one for each of the paid plans. In each variation, we presented the visitor with the specific benefits of a plan with the upgrade price directly on the CTA. This allowed us to then skip the plan selection step and go directly into the upgrade flow (enter credit card info).

But our hypothesis says we want to show “the best plan” for each visitor. How do we go about determining that?

In this situation a single variation is unlikely to be optimal for all visitors, since free plan users are going to have different reasons for upgrading and will likely prefer different plans. So running a randomized experiment with the goal of picking a “winning” variation doesn’t make much sense.

What if instead we used context about the visitor and could show them the experience that was most likely to maximize revenue? We established this context using behavioral and firmographic data from an enrichment provider (Clearbit) and looked at past outcomes to predict in real time the “best” experience for each visitor. The “best” experience isn’t necessarily the one that’s most likely to convert, since each of these plans has significantly different revenue implications.

Instead, we predicted the variation that was most likely to maximize Customer Lifetime Value for each individual visitor in real-time and served that experience. Utilizing machine learning we created a rapid feedback loop to ensure our predictions were continuously improving and adapting (more on this below).
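The difference between "most likely to convert" and "most likely to maximize value" is easy to see with a little arithmetic. The sketch below scores each experience by expected value, meaning predicted conversion probability multiplied by the lifetime value of the plan. The plan names, probabilities and LTV figures are hypothetical placeholders, not InVision’s numbers, and a real model would produce the probabilities per visitor.

```python
def best_experience(conversion_prob, plan_ltv):
    """Return (best_name, expected_values), scoring each option by P(convert) * LTV."""
    expected = {
        name: conversion_prob[name] * plan_ltv[name]
        for name in conversion_prob
    }
    best = max(expected, key=expected.get)
    return best, expected


# Hypothetical predictions for one visitor (all numbers are made up).
probs = {"plan_a": 0.05, "plan_b": 0.03, "plan_c": 0.01}
ltv = {"plan_a": 100.0, "plan_b": 300.0, "plan_c": 600.0}

most_likely_to_convert = max(probs, key=probs.get)  # "plan_a"
best, expected = best_experience(probs, ltv)        # "plan_b"
```

In this made-up case the cheapest plan converts best, but the middle plan has the highest expected value, so that is the experience a value-maximizing policy would serve.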

To learn how our predictive model fared we ran an experiment.

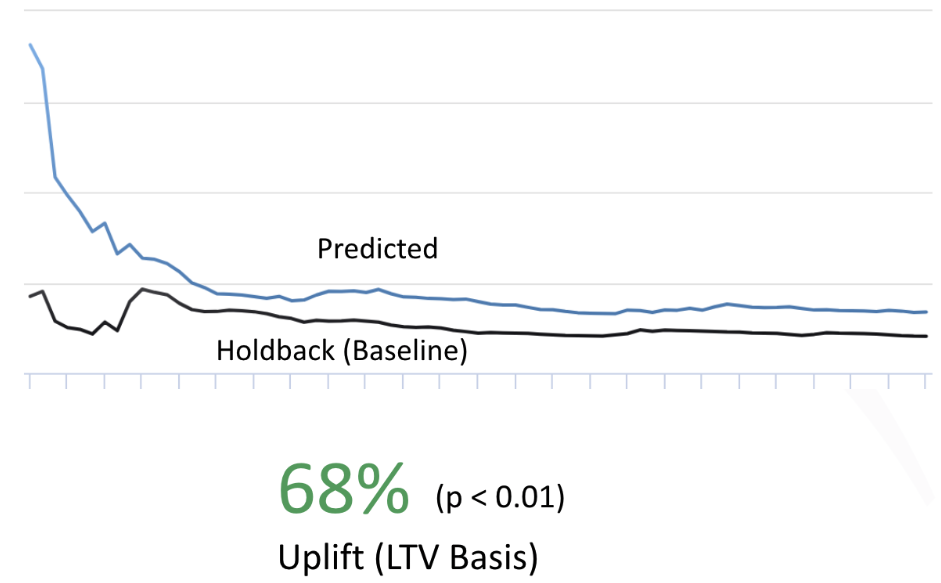

For half of the traffic (the “predicted” population), the model chose among four experiences (the baseline and the three variations); the other half (the “holdback” population) only saw the baseline.
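One common way to implement a stable 50/50 split like this is to hash a visitor identifier into a bucket, so the same visitor always lands in the same population. The sketch below is an illustration under that assumption; the function name, the salt string and the use of SHA-256 are choices made for the example rather than a description of how this experiment was actually wired up.

```python
import hashlib


def assign_population(visitor_id, predicted_share=0.5, salt="upgrade-experiment"):
    """Deterministically assign a visitor to the "predicted" or "holdback" population."""
    digest = hashlib.sha256(f"{salt}:{visitor_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "predicted" if bucket < predicted_share else "holdback"


# The same (hypothetical) visitor id always gets the same assignment.
population = assign_population("visitor-123")
```

Changing the salt reshuffles visitors for a new experiment, which prevents the same people from always ending up in the holdback.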

Results

Looking at the cumulative results, we see a significant and consistent improvement in the predicted experiences over the holdback. Visitors who received the predicted experiences were worth 68% (p < 0.01) more on average than those who saw the control.
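For anyone who wants to run the same kind of comparison on their own data, here is a rough sketch of computing a relative lift in value per visitor and a one-sided permutation-test p-value. This is not the analysis behind the numbers above; the function names are illustrative and the sample values are synthetic.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def relative_lift(treatment, control):
    """Relative difference in mean value per visitor, e.g. 0.68 means +68%."""
    return _mean(treatment) / _mean(control) - 1.0


def permutation_p_value(treatment, control, n_permutations=2000, seed=0):
    """One-sided p-value for mean(treatment) > mean(control) via label shuffling."""
    rng = random.Random(seed)
    observed = _mean(treatment) - _mean(control)
    pooled = list(treatment) + list(control)
    n_treat = len(treatment)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign visitors to the two groups
        diff = _mean(pooled[:n_treat]) - _mean(pooled[n_treat:])
        if diff >= observed:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return (hits + 1) / (n_permutations + 1)


# Synthetic per-visitor values (most visitors are worth 0; a few upgrade).
holdback = [0.0] * 95 + [120.0] * 5
predicted = [0.0] * 90 + [120.0] * 6 + [300.0] * 4
lift = relative_lift(predicted, holdback)
p_value = permutation_p_value(predicted, holdback)
```

A permutation test is used here because revenue per visitor is usually heavily skewed, with many zeros and a few large values, and the test makes no assumption about the shape of that distribution.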

Conclusion

The costs associated with generalization aren’t only a function of time. In a randomized experiment, you’re trying to extrapolate (generalize) the single best experience for a population. If that population is diverse (as it is on a website home page), it’s likely that even the best-performing variation for the entire population is not the most effective one for certain subgroups. Being able to target these subgroups with a better experience on a 1:1 basis should be far more effective than picking a single variation.
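A toy calculation shows why the single overall winner can leave value on the table. The segments, traffic shares and per-visitor values below are invented purely to illustrate the point, and the function names are assumptions.

```python
def single_best_value(segments):
    """Best average value per visitor when everyone sees the same variation."""
    variations = next(iter(segments.values()))["value"].keys()
    totals = {
        v: sum(s["share"] * s["value"][v] for s in segments.values())
        for v in variations
    }
    best = max(totals, key=totals.get)
    return best, totals[best]


def per_segment_value(segments):
    """Average value per visitor when each segment sees its own best variation."""
    return sum(s["share"] * max(s["value"].values()) for s in segments.values())


# Hypothetical segments: traffic share and value per visitor for variations A and B.
segments = {
    "small_team": {"share": 0.6, "value": {"A": 4.0, "B": 6.0}},
    "enterprise": {"share": 0.4, "value": {"A": 12.0, "B": 5.0}},
}
winner, overall = single_best_value(segments)  # A wins overall at about 7.2
targeted = per_segment_value(segments)         # about 8.4 when targeted per segment
```

Here variation A wins the population-wide test, yet serving B to the small-team segment and A to the enterprise segment yields a higher average value per visitor.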

It requires a degree of data integration, but as we’ve seen, with better use of customer context and historical outcomes, predictive optimization can be much more agile and deliver significantly higher returns with less manual effort than traditional experimentation approaches.